Prepare for a Long Battle against Deepfakes

(This article originally appeared on KDnuggets. For more, visit https://www.kdnuggets.com/)

While deepfakes threaten to destroy our perception of reality, the tech giants are throwing down the gauntlet and working to enhance the state of the art in combating doctored videos and images.

When Stephen Hawking warned of the dangers of artificial intelligence in 2015, his concern was a superhuman AI that would pose an existential risk to humanity. But in recent years, a much more imminent danger of AI has emerged, one that even a genius like Hawking could not have predicted.

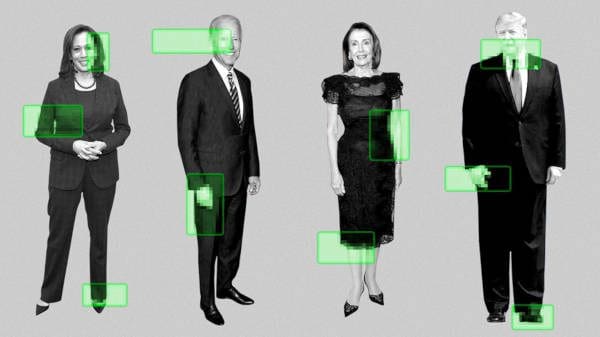

Deepfakes depict people in videos they never appeared in, saying things they never said and doing things they never did. Some of the harmless ones superimpose the actor Nicolas Cage’s face on his Hollywood peers, while the more serious and dangerous ones target politicians like US House Speaker Nancy Pelosi.

Deeptrace, a cybersecurity startup based in Amsterdam, found 14,698 deepfakes online in June and July, an 84% increase since December of 2018, when the number of AI-manipulated videos was 7,964.

With 2020 being an election year, the prospect of deepfakes swaying public opinion has many American lawmakers worried. In a letter, the Senate Intelligence Committee has called on tech giants like Facebook, YouTube, and Twitter to implement procedures that will discourage users from sharing such fraudulent content.

“As deep fake technology becomes more advanced and more accessible, it could pose a threat to United States public discourse and national security,” the letter states.

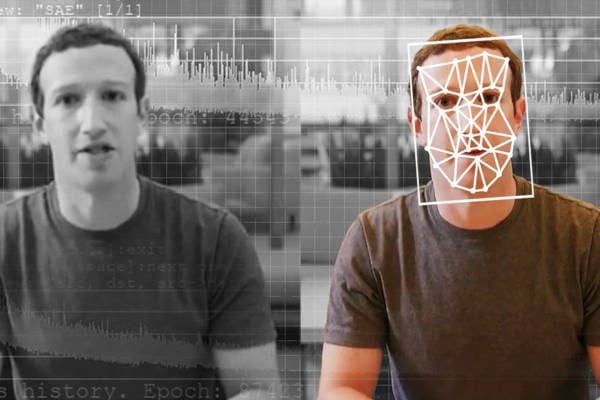

Facebook’s Deepfake Detection Challenge

In its effort to curb deepfakes, Facebook has teamed up with Microsoft, Amazon Web Services, the Partnership on AI, and academics from the University of Oxford, MIT, Cornell Tech, UC Berkeley, the University of Maryland, College Park, and the State University of New York on a Deepfake Detection Challenge that was announced back in September.

For this challenge, Facebook recruited actors and created a set of deepfakes that developers can use to design a detection tool. “No Facebook user data will be used in this data set,” the challenge website makes clear, mindful of the company’s history of mishandling customer data.

The competition, which was officially launched on 11th December at the AI conference NeurIPS, will run through March 2020. Facebook has dedicated $10 million to fund the entire project.

Facebook itself was involved in a deepfake scandal back in June, when a doctored video of House Speaker Nancy Pelosi went viral. After Facebook refused to remove the video, which depicted Pelosi stumbling over her words, a deepfake of Mark Zuckerberg declaring “whoever controls the data, controls the future” emerged as a test of Facebook’s deepfake policy.

It will be interesting to see whether Facebook’s effort bears fruit.

Twitter Seeks Public Input in Crafting a Deepfake Policy

In a set of Tweets in October, Twitter announced it was updating its rules to deal with ‘synthetic and manipulated media’ and sought public input. Later, it announced the first draft of its policy to address any photo, audio, or video that has been altered or fabricated to mislead people.

Here’s what the social media website intends to do:

- place a notice next to Tweets that share synthetic or manipulated media;

- warn people before they share or like Tweets with synthetic or manipulated media; or

- add a link – for example, to a news article or Twitter Moment – so that people can read more about why various sources believe the media is synthetic or manipulated.

The company sought feedback on its policy through a survey that was open until the 27th of November. People also provided input through the hashtag #TwitterPolicyFeedback. The platform is currently assessing the feedback so it can make adjustments and incorporate the policy into the Twitter Rules.

Google Releases 3,000 of Its Own Deepfakes

Like Facebook, Google has released a huge dataset of deepfake videos to help researchers develop deepfake detection tools. Working with paid actors, the search engine giant filmed a number of scenes and used publicly available deepfake creation techniques to build this vast dataset.

The company’s goal is to help researchers train automated systems to detect doctored videos effectively and accurately. The search engine giant will add more videos to its dataset to keep pace with a rapidly progressing technology. “Since the field is moving quickly, we’ll add to this dataset as deepfake technology evolves, and we’ll continue to work with partners in this space,” the company said in its announcement.

With Facebook, Twitter, and Google all working on the problem, there’s hope that a detection tool might soon be on the horizon. But until then, businesses big and small are worried about the threat posed by deepfake technology.

A New Form of Cybercrime

Businesses lose billions to identity theft each year. To make matters worse, criminals are now using more advanced techniques than simple domain theft. In March of 2019, a U.K.-based energy firm fell victim to an unusual fraud in which criminals used AI-based software to mimic a chief executive’s voice and demand a fraudulent transfer of €220,000 ($243,000).

The CEO of the company believed he was speaking to the chief executive of the firm’s German parent company when he was asked to transfer funds to a Hungarian supplier.

Investigators believe the culprits used machine-learning technology to mimic the vocal melody and accent of the German chief executive.

Philipp Amann, head of strategy at Europol’s European Cybercrime Center, says it is unclear whether this is the first attack using AI or whether similar attacks have occurred in the past and gone unreported.

In any case, this application of deep learning is enough to send shock waves through the corporate world.

What Lies Ahead?

With the emergence of programs like FakeApp, it’s no longer just experienced programmers who can create fake videos. The technology is available to the masses, and deepfakes are regularly surfacing across social media.

Although it is reassuring that tech giants like Microsoft, Google, and Amazon, along with Twitter, are leading the fight against doctored videos, the AI behind deepfakes is getting more and more sophisticated.

Experts believe the menace of deepfakes needs to be combated on multiple fronts. Giorgio Patrini, chief executive officer of Deeptrace, called for a shift in mindset while speaking to Science Focus. “We really need to rewire ourselves to stop believing that a video is the truth,” Patrini said.

As deepfakes threaten to destroy our perception of reality, the time is ripe to undertake educational efforts on a mass scale. Perhaps instilling a bit of skepticism in the public will save us from an epidemic of misinformation and deceit.
